How to Find Where, Exactly, the Problem Lies

Feb. 9th, 2026 08:00 am


police band

Feb. 8th, 2026 11:24 pm

I honestly didn't want to go, since that sort of thing doesn't interest me much, and I felt like I had more important things to do, but Cindy wanted to support her friends. The band did medleys of songs by Journey, Meat Loaf, Billy Joel and The Beatles. Let me tell you, as a huge fan of rock music, there are few bands Cindy likes less than Journey and Meat Loaf. After the intermission, they did three tunes as a jazz ensemble. This was more my thing, and I really liked the first one in particular. Too bad the two women behind me were chattering and laughing loudly.

The show was free and we had free parking, three blocks away at my office. It was 20°F, which doesn't seem like it should be very cold, but it was cold!

See all the angles of Cadillac’s 2026 livery

Feb. 9th, 2026 04:33 am

Cadillac reveal their debut livery for 2026 F1 season

Feb. 9th, 2026 04:33 am

Drawer illusion.

Feb. 8th, 2026 11:00 pm

08feb2026

Feb. 8th, 2026 06:03 pm

Best gas masks, according to The Verge. “The Verge consulted journalists who covered the Portland protests in 2020, where federal and local forces regularly used tear gas against protesters over the course of four months.”

BLUE is an acronym for Build Language User Extensible. It is a self-hosting build-system fully written in Guile.

Tiny Model Checker: Systematic concurrency exploration in a few lines of Scheme, by db7.

Penrose, “Create beautiful diagrams just by typing notation in plain text.”

Cryptography 30 years apart: Ascon on an HP-16C

JSONata is a lightweight query and transformation language for JSON data.

Hel, a Helix Emulation Layer for Emacs.

Reactive jQuery for Spaghetti-fied Legacy Codebases (or When You Can’t Have Nice Things) (2020), by Meredith Matthews.

Koyaanisqatsi In Five Minutes, by Wyatt Hodgson.

Bye Bi-Centenary

Feb. 8th, 2026 10:01 am

Do we celebrate 200th year anniversaries? Last year's Jane Austen 250 was a blast, so I turned an excited, expectant face to my beloved Mr Walker and asked if there were any rumours of exhibitions for the bicentenaries of the Pre-Raphaelite Brotherhood that will be occurring in 2027 (Holman Hunt), 2028 (Rossetti) and 2029 (Millais). He gave me his long-suffering look (which I am very familiar with) and said words to the effect of 'What do you think?'

Before anyone points out the obvious, I am very well aware that time/money/resources/money/logistics/money and money are the main problems here, and even Mr Walker had to agree that if the government gave a lovely big sack of cash over to the museums of this land and said 'have at it,' getting a retrospective of Holman Hunt/Rossetti/Millais or anyone else would be possible. A lot of work, but possible. All this led me to wondering about 100 years ago and the sort of fuss that went on for the centenary of the PRBs...

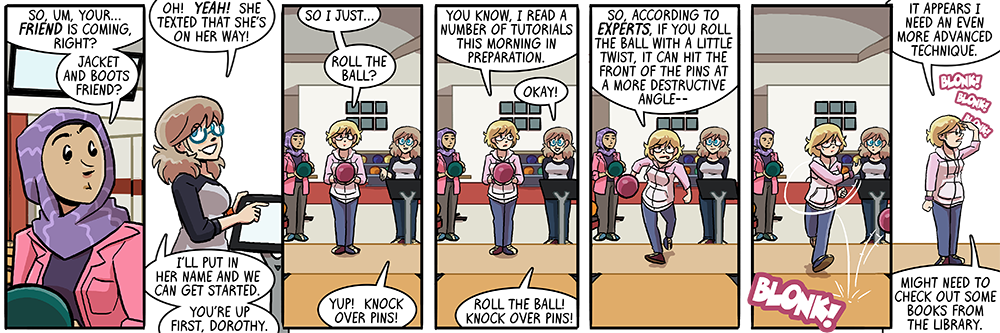

Another thing that got me wondering was coming across this cartoon (by E H Shepard of Winnie the Pooh fame) which was in the newspapers in response to the very popular William Morris centenary exhibition in 1934 at the V&A. Morris was still known and popular, with thousands of newspaper articles about him, a number which only increased in 1934 when everyone was talking about him. There were special editions of book reviews about his literary output, plus new biographies about him. The exhibition was opened by Stanley Baldwin (yes, I know, I will come to that in a moment), who was about to become Prime Minister again, so that got a lot of traction in the papers. Baldwin talked about how unique and amazing Morris was and he was declared a genius once more. Stanley Baldwin was, of course, the nephew of Morris's best mate, which doesn't hurt and draws on an essential point - centenaries only work when people are around to remember you.

Let's start with Holman Hunt as the oldest of the main PRB trio, therefore his centenary appeared in 1927. I must admit I thought HH was going to be the one with the slimmest newspaper coverage, but I had not counted on the power of a widow. Fanny Holman Hunt (sister of the first wife Edith - he married them alphabetically which is handy) had an open house and told stories of her husband to members of the Londoners' Circle who did some sort of Holman Hunt pilgrimage and she told a special story about this painting...

Christ and the Marys (1847-c.1900), William Holman Hunt

Hunt apparently hated his weird palm tree (he had never seen a palm tree at that point) so much he turned it to the wall and refused to look at it for decades until finally finishing it in old age. The papers also latched onto the craze for The Light of the World that had gripped the world when it went on tour to the Empire in 1905 to 1907, drawing massive crowds. This would have been within living memory for many of the readers, and of course Hunt died in 1910, so his centenary was less than 20 years later. He would have been very much remembered.

Morning Music (1867), Dante Gabriel Rossetti

I would have put money on Rossetti being the one that drew the most newspaper coverage for his centenary in 1928. I was very much wrong. Firstly, I fully appreciated the people of Sheffield claiming Elizabeth Siddal as a 'Sheffield Girl' (Sheffield Daily Telegraph 16 & 24 May 1928) - how fitting that Rossetti's special year was made in part about how amazing Miss Siddal was. Hastings also had a celebration at St Clement's Church (where he married the Sid). Birmingham Museum and Art Gallery had a 'small' centenary exhibition, drawn from their own collections with two exceptions - they borrowed Morning Music from Mrs J R Holliday (wife of James Richardson Holliday, although they apparently gave the work to Birmingham in 1927) and a chalk sketch of a figure from Dante's Dream which was borrowed from Misses Ethel and Helen Colman (of the mustard family) of Carrow Abbey. The sisters also owned the finished oil (now in Dundee) which they would have lent as well, but it was so huge no-one could work out how to move it safely.

Dante's Dream on the Day of the Death of Beatrice (1880), Dante Gabriel Rossetti

The Daily Express published a very interesting piece about the lack of 'Rossetti Girls' in society in 1928. Apparently, you could go to all manner of parties in Chelsea these days and never meet 'one tall woman with great weary eyes, the butterfly mouth and hollow cheeks which the Pre-Raphaelite idea demanded' - well, quite. Apparently, women are all unnaturally energetic these days and refuse to be languid. I hope you are all ashamed of yourselves.

I think some of the confusion over the Rossetti centenary is that his poetry was not really fashionable and his painting was overshadowed by his love-life, which was neither spicy enough for the tabloids nor romantic enough for the academics to be of great interest. Also, people kept making it about other people - not only Elizabeth (well done Sheffield) but also Frederic Shields, Walter Deverell, not to mention bloody Hall Caine who popped back up like a cold sore, in case we forgot who loved Rossetti the most. So many of the little articles seem to begin 'the centenary of Rossetti's birth reminds me of this entirely other person.' Sorry Rossetti, you will have to wait until everyone thinks you are the most important one...

Just Awake or Waking (1865), John Everett Millais

Millais's hundredth birthday the year after obviously benefitted from the heightened awareness the previous two brought. He had already been mentioned multiple times in the retellings of the PRB origin stories. Millais, like Hunt, also benefitted from surviving children, with his daughter placing a red rose on his statue in Tate Britain's garden. Birmingham also held a centenary exhibition (well done Brum) throughout June. Southampton claimed him as a son of the city (born round the corner from TK Maxx) and Christie's sold Just Awake (now known as Waking), complete with a rather high-profile visit from Mary Millais (1860-1944), the model (aged 5). Despite the extra publicity and an expected price of two thousand guineas, it only made five hundred.

John Ruskin (1853-1854), John Everett Millais

Scotland claimed Millais, fighting off the claims of Southampton and Jersey, and the Sphere had a double-page retrospective of his life, work and Presidency of the Royal Academy. It had a picture of Holman Hunt and a picture of Ruskin, but no picture of Rossetti, which is telling. Very little of the press coverage was about his marriage and the surrounding scandal, with only the Sphere mentioning Ruskin at all. I wonder if that was because, like Hunt, the children and grandchildren were around to steer conversations? On that note, Esme Millais, granddaughter of the artist, got engaged in the same year (excellent timing) and the engagement received far more publicity than it possibly warranted.

Going back to the cartoon, the amount of publicity around Morris's centenary in 1934 surprised me. May Morris was obviously still with us (as was Jenny) and he was declared 'the greatest of Victorians' in the Salisbury Times which is a bold assertion.

Clerk Saunders (1861), Edward Burne-Jones

The one that shocked me most was Edward Burne-Jones. 1933 should have been his year, especially after the roll-over of the PRB where he was sometimes mentioned, but no. The Saturday Review started their piece on him with "Anything to do with Camelot makes me sick," quoting a young person in response to poor old Ned. Wales claimed Burne-Jones, with the Western Mail declaring he was the greatest of all Welsh artists, which is a bold move for a chap from Birmingham. They also claimed King Arthur and Camelot were Welsh too, so there's that. Stanley Baldwin also opened a centenary exhibition at the Tate for his uncle, declaring that modern life could be so vulgar and ugly, we all need a bit of beauty. Gwen Raverat (granddaughter of Charles Darwin) referred to the 'childishness of Burne-Jones ideal' in her review. Ouch.

Love and the Pilgrim (1896-7), Edward Burne-Jones

Possibly reflective of a general lack of interest, when Love and the Pilgrim sold in March of 1933, it only made £210, as opposed to the almost £6k it had made in 1898. The Truth pondered what the problem was, as you got value for money in size (10 feet by 5), but concluded that slumps come to us all, and probably no-one would want to buy a Burne-Jones for many a year to come...

So, going by my very unscientific newspaper-extrapolation of information, I was surprised at the levels of interest and attitude on both ends of the scale. I was aware that by the late 1920s, love for the Pre-Raphs was not going to be at a pinnacle, but I did not have the dislike for Burne-Jones on my bingo card. The people giving the reviews were artists (because the Guardian's Jonathan Jones was yet to be born/fashioned in the darkened cave devoid of joy and whimsy), with both Gwen Raverat and Robert Anning Bell not exactly raving about Ned's angels and knights. Starting your review by quoting someone who is nauseated by Burne-Jones is an exciting stance. I wasn't surprised by the low interest in Holman Hunt, although the coverage he got was positive. Millais's coverage was respectful and quite family orientated. Rossetti's was scatter-gun and about lots of other people. Morris was triumphant. People's love for him, his genius, his contribution to the world, made the press coverage positive and weighty. Burne-Jones got less than 400 mentions in his year, Morris got almost 2.5K; the only other one near him was Millais with 1.6K, and that included his granddaughter's wedding announcement. Morris went last, but I don't think he benefitted from that, because surely Burne-Jones would have had more too. I do wonder, though, if Burne-Jones and Millais suffered at all from their bad auction results. Despite Millais's otherwise positive year, there was a bit of niggling over the drop in auction result, despite the visit from the model.

Next year is Holman Hunt's bi-centenary and I am fairly certain no-one has a big retrospective planned (please correct me!) and in fact, of all the Pre-Raphaelite and adjacent artists who should have one, I don't think I've ever attended a purely HH show. Following on from that, I have heard rumours for Rossetti, but nothing for Millais. Possibly Morris and Burne-Jones stand a better chance as there are public bodies who have sizeable collections of their works to form a basis for an exhibition. Herein is the problem - exhibitions are expensive (yes, Mr Walker, I am listening) and the sheer expense of loans and getting them to you is a problem. While I argue that such exhibitions would be popular, my sensible husband points out the logistical nightmare that has to be balanced, and that is nationally speaking. When those works of art are now spread over the world, that is yet another layer. Which brings me to Jane Austen...

250th logo from the Austen Centre

Last year was the 250th anniversary of Austen's birth and didn't we all know about it? She is ever the queen of transmedia: I saw ads for tea-towels, tea-cups, special editions of her novels, showings of the films, walking tours of Bath etc and numerous small exhibitions. She managed full coverage, which will no doubt continue as we have yet another Pride and Prejudice on Netflix and The Other Bennet Sister coming to the BBC this year. Maybe this is the model we need for our Pre-Raphs. There was no big exhibition (British Library had a small display) but she was omnipresent in an impressive way. So how about some documentaries, showings of The Love School and Dante's Inferno? Small exhibitions all over the place together with tea-towels and t-shirts. Walking tours of graveyards and riverbanks. I'm up for it all. Jane Austen has shown us that maybe we don't need a blockbuster to make an impact.

So, let's get planning.